Reading data

From local files, databases, cloud-hosted data stores, and beyond, if you can read your data into Python, you can also read it into Shiny. Here we’ll highlight some of our recommended ways to read data, which can be grouped into two main categories: eager and lazy loading.

By eager, we mean loading all your data into memory when the app first starts. By lazy, we mean loading portions of data into memory as needed. The eager approach is generally recommended for small to moderately sized datasets – for larger data, lazy loading may be necessary, but it will add complexity to your app logic.

Eager loading

Loading all your data into memory when your app first starts is the simplest way to work with data in Shiny. This makes it easy to create UI from data, and more generally reason about your app’s data logic. However, this also means that before a user can interact with your app, the data must finish being loaded into memory, so be mindful to keep this step as fast and efficient as possible (or consider lazy loading).

From a file

If your data lives in a file (e.g., CSV, Parquet, etc.), you can read it into memory using a variety of tools. Popular libraries for this include Polars, DuckDB, and Pandas:

Polars is a fantastic library that makes data manipulation fast and intuitive. We recommend starting here for most data analysis tasks in Shiny apps.

import polars as pl

dat = pl.read_csv(Path(__file__).parent / "my_data.csv")DuckDB is a fast analytical database system. It’s a great choice to quickly import data into memory.

import duckdb

from pathlib import Path

dat = duckdb.read_csv(Path(__file__).parent / "my_data.csv")Pandas is a widely used data manipulation library in Python. While it may not be as fast and ergonomic as Polars for large datasets, it is still a solid choice for many apps.

import pandas as pd

from pathlib import Path

dat = pd.read_csv(Path(__file__).parent / "my_data.csv")From a database

If your data lives in a database, reading it into memory can be done using similar tools like Polars. Most databases have a connection interface via SQLAlchemy, which has integration with packages like Polars (and Pandas):

import polars as pl

dat = pl.read_database_uri(

"SELECT * FROM tablename",

"postgresql://user:password@hostname/database_name"

)See Polars and SQLAlchemy’s docs for more info, like connecting to other database types.

pl.read_database_uri() opens a connection to the database, runs the provided query, and then closes the connection. If you need to read multiple tables, consider using a database-centric library like Ibis to manage the connection and queries more effectively. We’ll cover this (explicitly opening/closing connections) more in the lazy loading section below.

From the cloud

Polars can read Parquet, CSV, IPC, and NDJSON files directly from cloud storage providers such as AWS, Azure, and Google Cloud Storage.

Depending on the provider, you will have to set up the appropriate credentialing. Once you’ve done so, reading from the cloud is just like reading from a file on disk.

import polars as pl

dat = pl.read_parquet("s3://bucket/yourfile.parquet")For more, see Polars’ cloud storage documentation.

Performance tips

When eagerly reading data into memory, it’s important to consider the performance implications. Here are some tips to help keep your app fast and responsive:

Express

When in Express mode (i.e., using shiny.express), we highly recommended reading data (or other expensive setup code) in a separate module, then importing it into your app.py file. This ensures the data is loaded only once, improving performance. For more details, refer to the Express documentation here.

setup.py

import polars as pl

from pathlib import Path

dat = pl.read_csv(Path(__file__).parent / "my_data.csv")app.py

from shiny.express import render

from setup import dat

@render.data_frame

def df():

return datLarge data

If you have larger data, eagerly reading all of it into memory may not be feasible, so you may need a smarter approach than loading it all into memory when your app first starts. The next section covers some more advanced techniques for lazily loading data into memory as needed.

However, before reaching for lazy loading (which will add complexity to your app), consider the following optimizations for reading data into memory:

- File format: Use efficient file formats like Parquet instead of CSV for large datasets.

- ETL process: Preprocess and clean your data before loading it into your app to reduce size and complexity.

- Database optimization: If reading from a database, ensure it’s optimized for analytical queries (e.g., using indexes, partitioning, etc.).

Lazy reading

Some datasets are too costly to read into memory when an app first loads. In this situation, we can leverage tools to lazily load data into memory as needed, helping keep our app fast and responsive.

From a file

A fantastic tool for lazily reading data from a file is Polars’ Lazy API. This way, you can write data manipulations and only perform them when you need to .collect() results. This looks something roughly like this:

import polars as pl

from shiny.express import render

from pathlib import Path

# Executes instantly (it doesn't load the data into memory)

dat = pl.scan_parquet(Path(__file__).parent / "test_data.parquet")

@render.data_frame

def df():

# Express manipulations and .collect() only when needed

return dat.head(100).collect()Polars also supports lazy reading from cloud storage. This is very helpful for limiting how much information is downloaded to memory, especially if you only need a small portion of a much larger dataset for your application (e.g., You have data from 1900-present but you only need 2000-present.)

import polars as pl

source = "s3://bucket/yourfile.parquet"

dat = pl.scan_parquet(source).filter("your filter here").collect()If you have a choice, we recommend using Polars’ Lazy API over running queries against a database, as it is often simpler and faster. That said, there are certainly cases where a database is more appropriate or necessary.

From a database

Some fantastic tools for lazy loading data from a database are Ibis and SQLAlchemy. With these tools, you can connect to practically any database and express data manipulations in Python (or SQL with SQLAlchemy). Your data manipulations are only executed when you call .execute(), similar to Polars’ .collect().

When using these tools, it’s important to explicitly open and close the database connection to avoid connection leaks.

Here’s an example using Ibis with a PostgreSQL database:

pip install 'ibis-framework[postgres]'import ibis

from shiny.express import render, session

# Connect to the database (nearly instant)

con = ibis.postgres.connect(

user="username",

password="password",

host="hostname",

port=5432,

database="database",

)

dat = con.table("tablename")

# Cleanup connection when the session ends

_ = session.on_ended(con.disconnect)

@render.data_frame

def df():

# Perform manipulations and .execute() only when needed

return dat.head(100).execute()If you prefer to write SQL more directly, use SQLAlchemy to connect to your database and execute SQL queries. This approach provides more flexibility but requires more manual handling of SQL queries.

Show example code

from shiny.express import render

from sqlalchemy import create_engine, text

engine = create_engine('postgresql://user:password@hostname/database_name')

with engine.connect() as conn:

dat = conn.execute(text("SELECT * FROM tablename LIMIT 100"))A real example

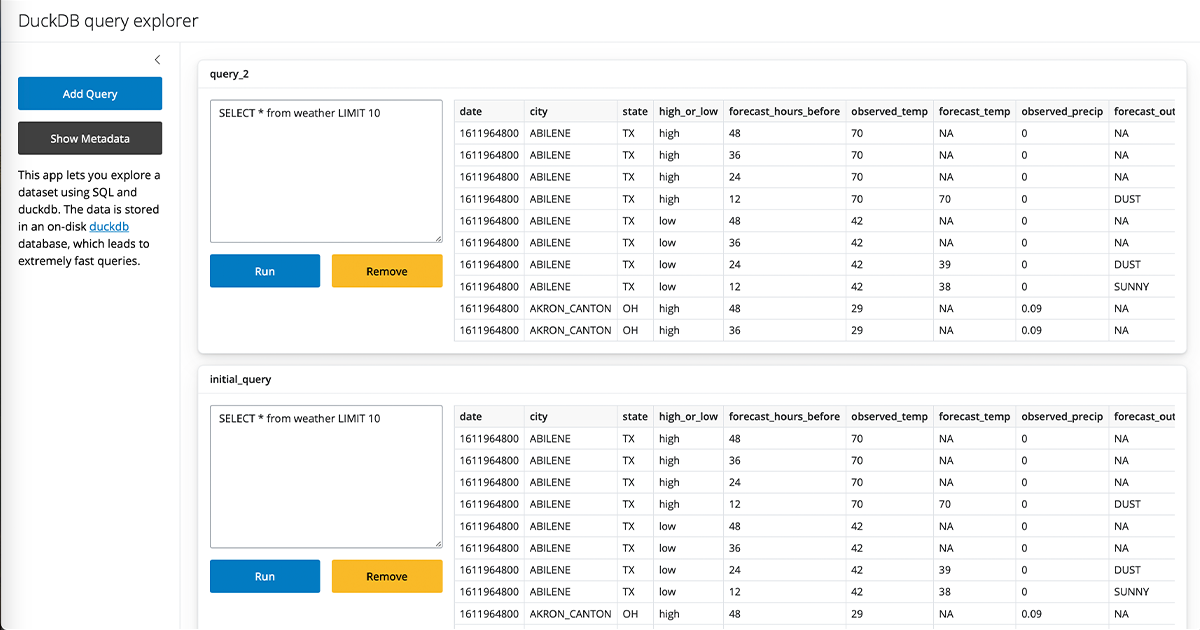

Let’s look at an example with the The Weather Dataset. This data is fairly large (nearly 28M rows), but by loading it lazily, we can keep our app responsive.

import polars as pl

from shiny import reactive

from shiny.express import input, render, ui

# Use `scan_*` instead of `read_*` to use the lazy API

dat = pl.scan_parquet("./daily_weather.parquet")

with ui.sidebar():

ui.input_checkbox_group(

"season",

"Season",

choices=["Summer", "Winter", "Fall", "Spring"],

selected="Summer",

)

# Import just the unique city names for our selectize input

cities = dat.select("city_name").unique().collect().to_series().to_list()

ui.input_selectize("city", "City", choices=cities)

# Store manipulation in a reactive calc

# (convenient for writing once and using in multiple places)

@reactive.calc

def filtered_dat():

return dat.filter(pl.col("city_name") == input.city()).filter(

pl.col("season").is_in(input.season())

)

# Display the filtered data

@render.data_frame

def results_df():

return filtered_dat().collect()import ibis

from ibis import _

from shiny.express import ui, render, input, session

from shiny import reactive

# Connect to the database (quick, doesn't load data)

con = ibis.postgres.connect(

user="", password="", host="", port=, database=""

)

dat = con.table("weather")

end_session = session.on_ended(con.disconnect)

with ui.sidebar():

ui.input_checkbox_group(

"season",

"Season",

choices=["Summer", "Winter", "Autumn", "Spring"],

selected="Summer",

)

# Import just the unique city names for our selectize input

cities = dat.select("city_name").distinct().execute()["city_name"].to_list()

ui.input_selectize("city", "City", choices=cities)

# Store data manipulations in a reactive calculation

# (convenient when using the data in multiple places)

@reactive.calc

def filtered_dat():

return dat.filter(

[_.city_name == input.city(), _.season.isin(input.season())]

)

# Display the filtered data

@render.data_frame

def results_df():

return filtered_dat().execute()Examples

We have numerous full examples and starter templates of dashboards using different datastores. We’ve sorted them into categories roughly corresponding to the tech stack.

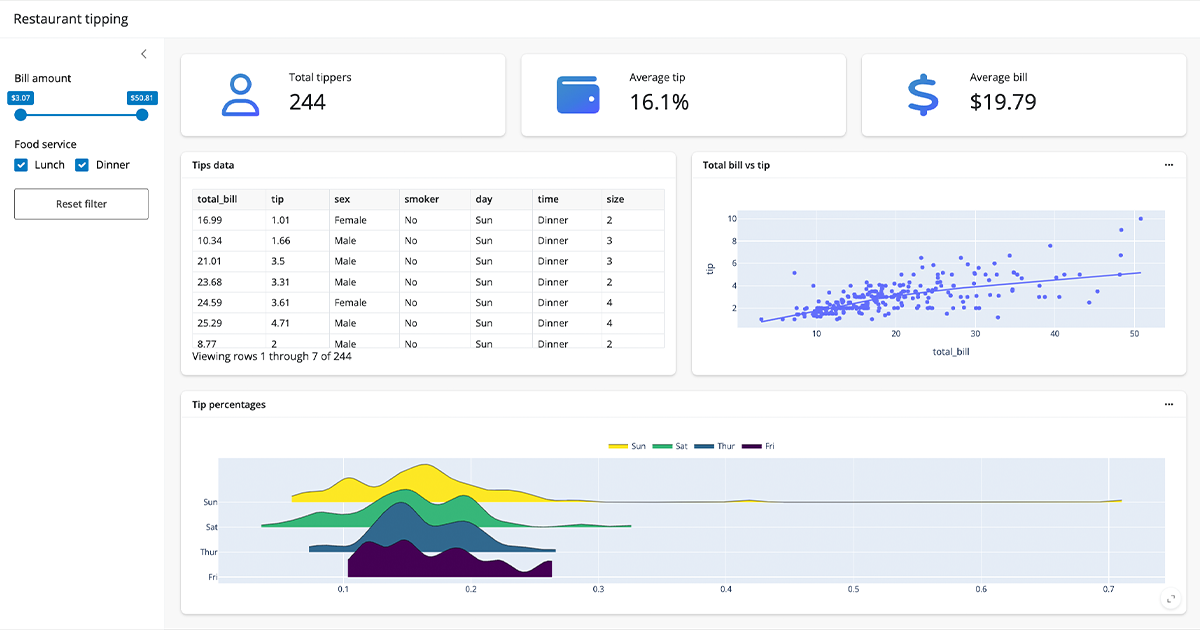

Restaurant Tips Dashboard Template

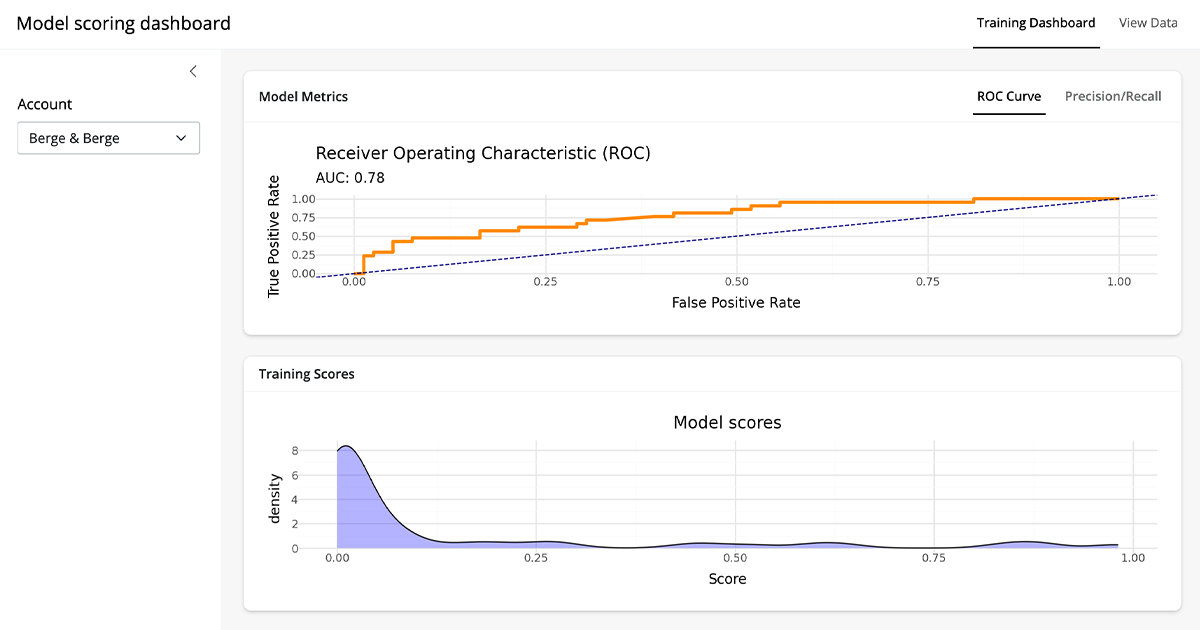

Model Scoring Dashboard Template

Query Explorer Template

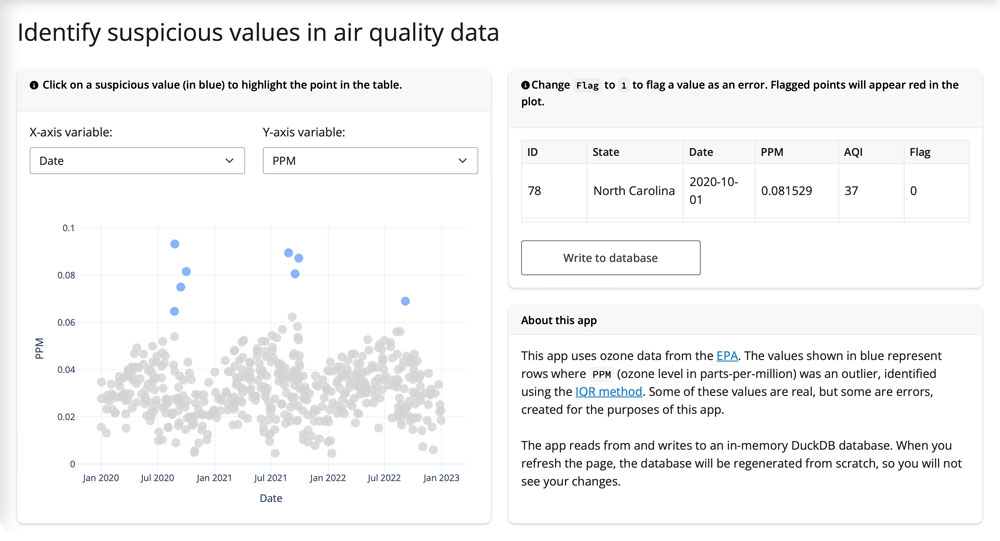

Identify Outliers App

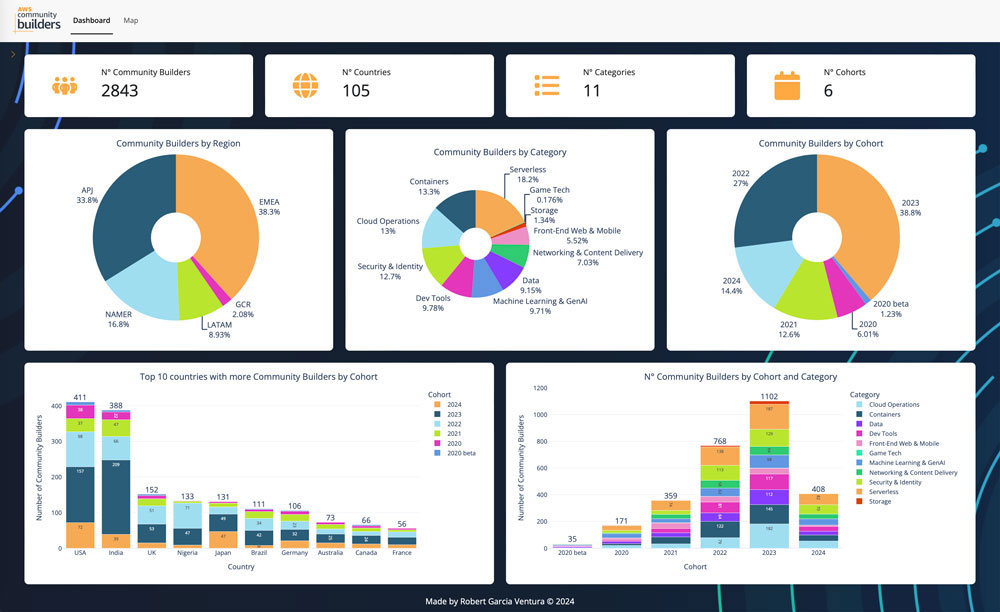

AWS Community Builders App

Reactive reading

In some cases, you may need your app to occasionally re-read an updated data source while the app is running. This is particularly important in scenarios where multiple users may be writing to the database 1 and/or there may be automated processes updating your data. While, this is a more advanced use case, Shiny provides tools to help with this scenario.

From a file

If your data lives in a local file, use reactive.file_reader() to monitor the file for changes and re-read it when it changes. By default, reactive.file_reader() checks the file’s modification time every second, but you can adjust this with the interval argument.

import pathlib

import polars as pl

from shiny import reactive

from shiny.express import render

file = pathlib.Path(__file__).parent / "mtcars.csv"

@reactive.file_reader(file)

def read_file():

return pl.read_csv(file)

@render.data_frame

def result():

return read_file()From a database

If your data lives in a database, use reactive.poll() to periodically check if the data has changed and re-read it when it has. With reactive.poll(), you’re required to provide a function to check if the data has changed, which should be as efficient as possible. Unfortunately, there is no universal way to check if data has changed in a database, so you’ll need to implement this logic based on your specific use case. In general, you might check a “last updated” timestamp column:

import ibis

from shiny.express import render

from shiny import reactive

con = ibis.postgres.connect(user="", password="", host="", port=, database="")

table = con.table("tablename")

def check_last_updated():

return table.last_updated.max().execute()

# Every 5 seconds, check if the max timestamp has changed

@reactive.poll(check_last_updated, interval_secs=5)

def data():

return table.execute()

@render.data_frame

def result():

return data()Since Polars is so good at (lazily) reading data from files, it’s tempting to export from a database to a file. In this case, you could set up a reactive.poll() to save the database table as a file whenever it changes, and reactive.file_reader() to re-read the file when the file changes.

For a deeper dive into reactively reading “streaming” data, see the Streaming Data template.

What about saving data?

In some cases, you may need to save data from your application back to a database or other remote location. This is a more advanced use case, but Shiny provides tools to help with this scenario. In the next article, we’ll cover some strategies for persisting data from your Shiny app.

Footnotes

We’ll talk more about writing to data stores from Shiny in our article on Persistent Storage↩︎